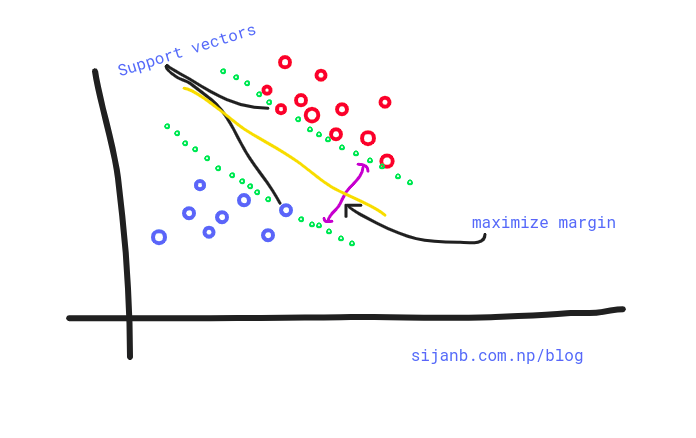

We can construct non-linear Support Vector Machines to tackle problems where the data is better divided by a non-linear boundary. Consider this example, where a linear classifier would perform poorly. We use the kernel trick to implicitly map the data into a higher-dimensional space where we can approach the problem using a linear SVM.

Since very few of us actually calculate the hyperplane by hand, most Support Vector Machine implementations assume that the data is not linearly separable. But what if I know for a fact that the problem is linearly separable? Oftentimes, the soft-margin SVM will perform better than the hard-margin SVM even if that is true. Hard-margin SVMs are prone to overfitting (especially when there are outliers in the training data) because they focus solely on maximizing the margin.
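To make the kernel trick mentioned above concrete, here is a minimal sketch that compares a linear SVM with an RBF-kernel SVM on concentric circles. The dataset (`make_circles`), the RBF kernel, and every parameter value are assumptions chosen for illustration; the article does not say which data or kernel its example uses.

```python
from sklearn.datasets import make_circles
from sklearn.model_selection import train_test_split
from sklearn.svm import SVC

# Synthetic data (an assumption): one class forms a ring around the other,
# so no straight line can separate them in the original 2-D space.
X, y = make_circles(n_samples=400, factor=0.3, noise=0.1, random_state=0)
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.25, random_state=0
)

# A linear kernel can only draw a straight decision boundary.
linear_acc = SVC(kernel="linear").fit(X_train, y_train).score(X_test, y_test)

# The RBF kernel implicitly works in a higher-dimensional feature space,
# where the two rings can be separated by a hyperplane.
rbf_acc = SVC(kernel="rbf").fit(X_train, y_train).score(X_test, y_test)

print(f"linear kernel accuracy: {linear_acc:.2f}")
print(f"RBF kernel accuracy:    {rbf_acc:.2f}")
```

On data like this, the RBF model should score far higher than the linear one, which is the situation where a non-linear SVM is worth the extra cost.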


Should I use a hard margin or a soft margin for my modeling problem? Most software packages, including Sklearn, implement a soft-margin SVM.

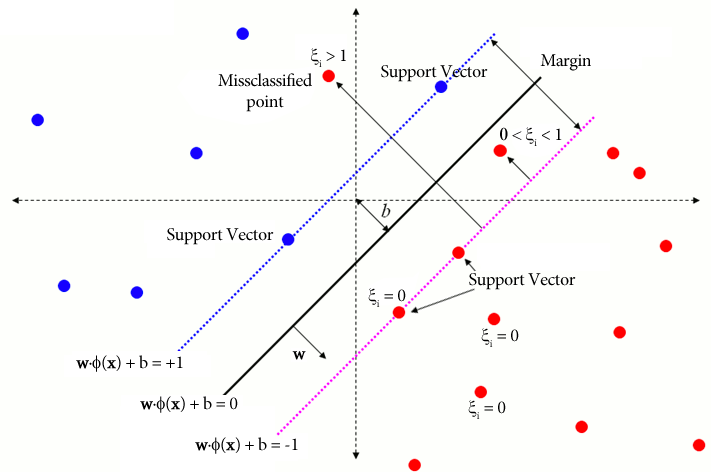

The soft-margin SVM applies when the training data is not linearly separable (or when we think that subsequent observations will not be linearly separable). The objective function is twofold: we wish to minimize the error while keeping the margin as wide as possible. The error is represented by the hinge loss function, which evaluates to 0 when an observation lies on the correct side of the margin. When an observation violates the margin or is misclassified (found on the wrong side of the hyperplane), the hinge loss grows in proportion to its distance from the margin. The \(C\) parameter determines the tradeoff between maximizing the margin and minimizing the hinge loss. In the convention used by Sklearn, where \(C\) weights the hinge loss, large values of \(C\) prioritize performance on the training data over margin width; as \(C\) grows very large, the algorithm behaves similarly to a hard-margin SVM. A small value of \(C\) prioritizes finding the maximum margin hyperplane over performance. The best way to choose \(C\) is by tuning the hyperparameter (train several SVMs with varying \(C\) values and select the value which yields the best performance).
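The tuning procedure described above (train several SVMs with different \(C\) values and keep the best) can be sketched with Sklearn's `GridSearchCV`. The synthetic dataset, the candidate grid, the scaling step, and the 5-fold cross-validation are all assumptions made for illustration, not choices taken from the article.

```python
from sklearn.datasets import make_classification
from sklearn.model_selection import GridSearchCV
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler
from sklearn.svm import SVC

# Synthetic, noisy data (an assumption): flip_y mislabels about 10% of the
# points, so the classes are not cleanly separable.
X, y = make_classification(
    n_samples=300, n_features=5, n_informative=3, n_redundant=0,
    flip_y=0.1, random_state=0,
)

# Scale the features first; SVMs are sensitive to feature scale.
pipeline = make_pipeline(StandardScaler(), SVC(kernel="linear"))

# Candidate C values (hypothetical grid): small C favors a wide margin,
# large C favors fitting the training data.
param_grid = {"svc__C": [0.01, 0.1, 1, 10, 100]}

# Fit one model per C value with 5-fold cross-validation and keep the best.
search = GridSearchCV(pipeline, param_grid, cv=5)
search.fit(X, y)

best_C = search.best_params_["svc__C"]
print(f"best C: {best_C}, mean CV accuracy: {search.best_score_:.3f}")
```

Cross-validated accuracy is used as the selection criterion here; a different metric can be passed through the `scoring` argument if accuracy is not the right measure for the problem.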

The objective of a linear Support Vector Machine is to find the hyperplane that results in the maximum distance between the two classes. This is known as the maximum margin hyperplane. The margin represents the region enclosed between the two closest points from either class. Finding the optimal hyperplane essentially becomes an optimization problem. The optimization objective and constraints depend on one important assumption: whether the training data is linearly separable. If we assume that it is, then we can select two parallel hyperplanes to mark the boundaries of either class. The objective is to find the pair of hyperplanes with the biggest margin between them. The maximum margin hyperplane will lie in the middle of these two hyperplanes.
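As a rough illustration of the two parallel hyperplanes described above, the sketch below fits a linear SVM on separable data and measures the gap between them. It assumes the usual parameterization in which the boundaries are \(w \cdot x + b = \pm 1\), so the margin width is \(2 / \lVert w \rVert\); the symbols \(w\) and \(b\), the synthetic blobs, and the use of a very large `C` in Sklearn's soft-margin `SVC` to approximate a hard margin are all assumptions, not details from the article.

```python
import numpy as np
from sklearn.datasets import make_blobs
from sklearn.svm import SVC

# Two well-separated clusters (an assumption), so the data is linearly separable.
X, y = make_blobs(
    n_samples=100, centers=[[-3, -3], [3, 3]], cluster_std=0.8, random_state=0
)
y_signed = np.where(y == 1, 1, -1)  # relabel the classes as -1 / +1

# Sklearn has no dedicated hard-margin mode; a very large C penalizes
# margin violations so heavily that the fit approximates one.
clf = SVC(kernel="linear", C=1e6).fit(X, y_signed)

w = clf.coef_[0]       # normal vector of the separating hyperplane
b = clf.intercept_[0]  # offset of the separating hyperplane

# Distance between the boundary hyperplanes w.x + b = +1 and w.x + b = -1.
margin_width = 2.0 / np.linalg.norm(w)

# Every training point should satisfy y * (w.x + b) >= 1, up to solver tolerance.
functional_margins = y_signed * (X @ w + b)

print(f"margin width: {margin_width:.3f}")
print(f"smallest y * (w.x + b): {functional_margins.min():.3f}")
print(f"number of support vectors: {len(clf.support_vectors_)}")
```

The points whose value of `y * (w.x + b)` is approximately 1 sit on the two boundary hyperplanes; they are the support vectors that determine where the maximum margin hyperplane lies.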
